Just when you thought that the terminal couldn’t get any niftier, here is another banger command that makes life easier. wget is a CLI utility that can download files, websites, and even entire FTP directories from the web. It supports the HTTP, HTTPS, and FTP protocols. Unlike a browser download, wget doesn’t need to be babysat, as it can work in the background retrieving files non-interactively. In today’s post, we’ll explore some of the useful features of wget and walk through a few examples to get started.

Installing wget

wget comes pre-installed on most distros, but if for some reason you don’t have it on your system you can use one of the following commands to get that taken care of:

Arch

sudo pacman -S wget

Ubuntu

sudo apt install wget

RHEL

sudo yum install wget
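If you'd rather let a script figure out which of those commands applies, here is a minimal sketch. The function name wget_install_cmd is made up for this post, and it only knows about the three package managers listed above, so treat it as a starting point rather than a complete installer.

```shell
#!/bin/sh
# Print the install command for a given package manager name.
# wget_install_cmd is a hypothetical helper; it only covers the
# three commands mentioned in this post.
wget_install_cmd() {
    case "$1" in
        pacman) echo "sudo pacman -S wget" ;;
        apt)    echo "sudo apt install wget" ;;
        yum)    echo "sudo yum install wget" ;;
        *)      echo "unknown package manager: $1" >&2; return 1 ;;
    esac
}

# If wget is already on the PATH there is nothing to do; otherwise
# suggest a command based on the first package manager found.
if command -v wget >/dev/null 2>&1; then
    echo "wget is already installed"
else
    for pm in pacman apt yum; do
        if command -v "$pm" >/dev/null 2>&1; then
            echo "try: $(wget_install_cmd "$pm")"
            break
        fi
    done
fi
```

The script only prints a suggestion and never installs anything on its own, which keeps sudo out of the picture until you decide to run the command yourself.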

wget Syntax

Personally, I’ve used wget primarily for installing CMSes on web servers, so let’s try doing that! Below we will download the latest version of WordPress into the current working directory.

wget https://wordpress.org/latest.zip

Pretty straightforward, right? Simply put wget in front of the URL of the file you want to download. Much like other commands we’ve come to know and love, you can also download several files with a single command by separating the targets with a space:

wget https://URL1 https://URL2 #and so on
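Once the list of targets gets long, typing them all on one line gets old fast. wget can also read its targets from a file with -i (or --input-file). The sketch below assumes a list file called urls.txt and two helper functions I named for this example; the URLs are the same placeholders as above, so the download call is left commented out.

```shell
#!/bin/sh
# Hypothetical list file name; any path works.
URL_LIST="urls.txt"

# Write each argument to the list file, one URL per line.
write_url_list() {
    printf '%s\n' "$@" > "$URL_LIST"
}

# Download everything in the list file, one URL after another.
# -i / --input-file tells wget to read its targets from a file.
fetch_url_list() {
    wget -i "$URL_LIST"
}

write_url_list "https://URL1" "https://URL2"   # placeholders, swap in real URLs
# fetch_url_list    # uncomment once the list holds real URLs
```

Keeping the URLs in a file also means the list can be version-controlled or generated by another script.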

This is only the tip of the wget iceberg, as it is a very robust utility.

wget options

Continuing Downloads

Much like a dedicated browser download manager, wget can continue downloads that were interrupted for any reason. Pass the -c or --continue switch to wget and it will attempt to resume the download from where it left off.

wget -c https://wordpress.org/latest.zip

I’ve found that it doesn’t really matter where the option is placed: wget responds correctly whether it comes before or after the target URL.
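In a script, -c pairs nicely with a simple retry loop. The following is a rough sketch, not a hardened downloader: resume_download is a name I made up, and the default of 5 attempts and the one-second pause are arbitrary assumptions. wget already retries some failures on its own, so this is mainly to show how -c lets each new attempt pick up the partial file.

```shell
#!/bin/sh
# Retry a download until it succeeds, resuming the partial file each time.
# Usage: resume_download URL [MAX_ATTEMPTS]
# resume_download is a hypothetical helper for this post.
resume_download() {
    url="$1"
    max="${2:-5}"      # assumed default of 5 attempts
    attempt=1
    while [ "$attempt" -le "$max" ]; do
        # -c continues from the bytes already on disk instead of starting over
        if wget -c "$url"; then
            return 0
        fi
        echo "attempt $attempt failed, retrying..." >&2
        attempt=$((attempt + 1))
        sleep 1
    done
    return 1
}

# Example call, commented out so nothing downloads by accident:
# resume_download https://wordpress.org/latest.zip 3
```

The function returns 0 as soon as one attempt succeeds and 1 if every attempt fails, so it can be chained with && in a larger script.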

wget resumed from the point where the download was interrupted. Note that the progress bar visually changed to indicate this.

Mirroring a site

Let’s try something more exotic. wget can do way more than download single files; it can also follow links in HTML and CSS recursively. That means with the right parameters you can mirror an entire site to your hard drive for offline viewing. Before we try this out, let’s create a new directory. When we point wget at a URL we often end up downloading a lot of files, so to keep things tidy we’ll work out of a sandbox directory:

mkdir wget-sandbox && cd wget-sandbox

Now that we have a sandbox to experiment in we can safely run the command below, which uses -m or --mirror:

wget -m https://rogerdudler.github.io/git-guide/

Be patient, as some sites, depending on their complexity, will take a while to complete.

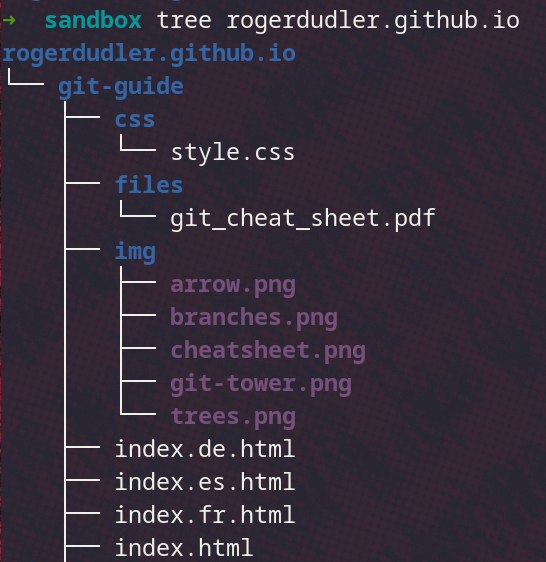

Once the site is finished mirroring, you’ll have a directory with the name of the site, and all its resources located neatly inside of it.

Pretty radical, right? In one command we can download an entire website (or at least the static version of it). Just for the sake of doing my duty, I must inform you that -m is shorthand for the following options: -r -N -l inf --no-remove-listing.

-r: recursively download files.

-N: turn on timestamping, so a file is only downloaded again when the remote copy is newer than the local one.

-l inf: sets the maximum depth to which links are followed. By default the max is 5, but here we set it to infinity to mirror the site.

--no-remove-listing: this keeps the FTP listing files, useful for debugging purposes.
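For offline viewing, -m is often combined with a few more switches. Here is a small sketch of that combination wrapped in a function; mirror_offline is a name I picked for this post, and the one-second wait is an arbitrary choice to go easy on the server.

```shell
#!/bin/sh
# Mirror a site for offline browsing.
# Usage: mirror_offline URL
# mirror_offline is a hypothetical wrapper, not part of wget.
mirror_offline() {
    # -m    mirror (recursion, timestamping, infinite depth)
    # -k    convert links so they point at the local copies
    # -p    also fetch images, CSS, and other page requisites
    # -np   never ascend to the parent directory of the start URL
    # -w 1  wait one second between requests
    wget -m -k -p -np -w 1 "$1"
}

# Example call, commented out so nothing downloads by accident:
# mirror_offline https://rogerdudler.github.io/git-guide/
```

The -np switch is what keeps a mirror of a subdirectory from wandering up into the rest of the site.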

While FTP is fine and dandy, from a security standpoint please don’t use FTP. I only mention it here for the sake of completeness:

wget ftp://demo:password@test.somewhere.net/

Parting ways

That was wget in a nutshell, another essential CLI command to try out! I can definitely see wget quickly becoming a webdev’s or archivist’s best friend with its mirroring feature. Imagine the scripting possibilities! That alone makes it worth taking a crack at wget with a file or two. As always, thanks for stopping by! Now go forth and download!